While AI systems are remarkably adept at handling text-based queries and at retrieving specialist knowledge that a general radiologist may find challenging, they stumble significantly when detailed image interpretation is required, with accuracy dropping by almost 40% relative to human radiologists. Conversely, radiologists shine in their natural domain: they confidently interpret all types of medical images, recognise imaging signs and integrate visual information, maintaining superior performance in image-dependent and atypical cases.

In our comparative analysis of performance on the MCQs featured on the Radiopaedia website, the best-performing AI, the online version of ChatGPT-4o tested in 06/2024, achieved an overall score of 67%, placing it between radiologists (74%) and residents (64%). Notably, an updated version of GPT-4o tested in 11/2024 scored only 56% on the same question set. Despite the small sample size, these results give us useful insight into how much weaker both AI systems are at image analysis than their text-processing skills would suggest.

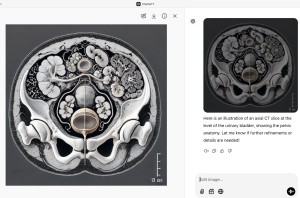

The subgroup analyses we conducted allowed us to address an intriguing question: does multimodal AI truly understand the text or images submitted to it? Multimodal AI relies on models that learn statistical relationships between words and image patterns in order to predict and produce coherent responses. However, it does not possess consciousness; its output is based on learned patterns rather than true understanding. Thus, while AI can process and generate text and images in a way that appears meaningful, it lacks genuine comprehension or awareness, which currently hinders its practical use in real-world medical settings. An illustrative example is provided.